Prompt quality has a direct effect on how well AI-powered applications perform. A well-crafted prompt can substantially improve a model's output, while a poorly constructed one can produce inaccurate or inconsistent results. To address this, Anthropic has added features to the Anthropic Console that simplify generating, testing, and evaluating prompts.

The latest Console enhancements let developers generate, test, and evaluate prompts in one place. Automatic test case generation and side-by-side output comparison help them get the best possible responses from Claude.

The update also makes prompt creation easier with a built-in prompt generator powered by Claude 3.5 Sonnet. Developers describe a task (e.g., “Triage inbound customer support requests”) and quickly receive a high-quality prompt, which cuts both the effort and the time needed to write one from scratch.

Testing with Automatic Test Cases

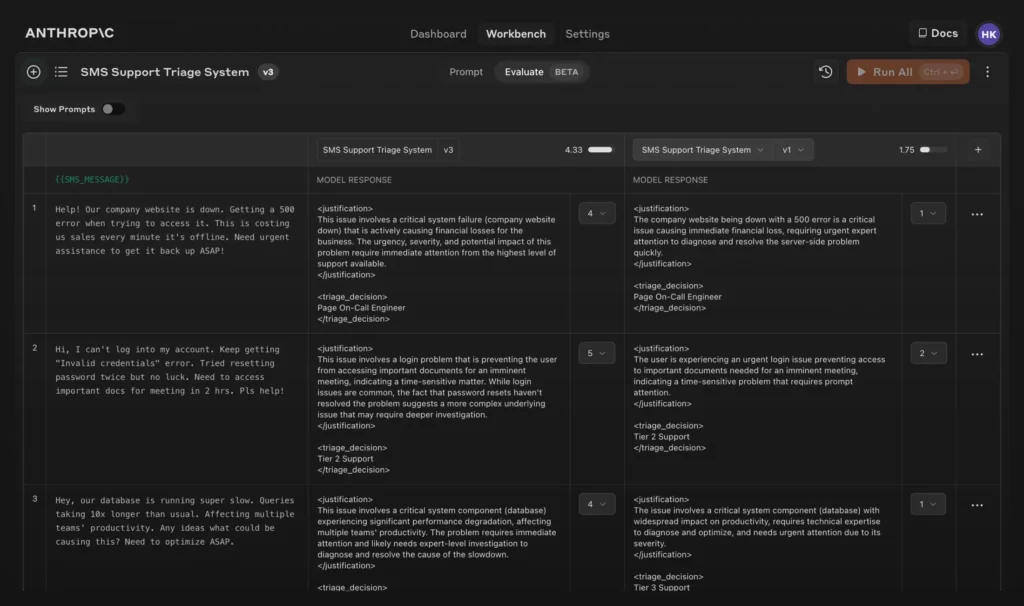

Testing prompts against realistic inputs before deployment is essential for ensuring their quality and reliability. Anthropic’s new Evaluate feature lets developers run prompts against a variety of real-world inputs directly within the Console, so they no longer have to manage test cases by hand. Test cases can be added manually, imported from a CSV file, or created automatically with Claude’s ‘Generate Test Case’ feature. Seeing how a prompt behaves across many different scenarios gives developers confidence in its performance before it reaches production.
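To make the CSV import path concrete, here is a minimal Python sketch of how a file of test cases can be turned into concrete prompts. It assumes, for illustration only, that the prompt marks its inputs with `{{variable}}` placeholders and that the CSV has one column per variable and one row per test case. The template text, the `support_request` column, and the helper functions are all hypothetical. They are not the Console's implementation.

```python
import csv
import io
import re

# Hypothetical prompt template; the {{...}} placeholder syntax is an
# assumption made for this illustration.
PROMPT_TEMPLATE = (
    "Classify the following customer support request as "
    "billing, technical, or other.\n\n<request>{{support_request}}</request>"
)

# Hypothetical test-case CSV: one column per prompt variable, one row per case.
TEST_CASES_CSV = """support_request
I was charged twice for my subscription this month.
The app crashes whenever I open the settings page.
Do you have an office in Berlin?
"""


def load_test_cases(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of {variable_name: value} dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} placeholder with its value; fail loudly if one is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"test case is missing variable {name!r}")
        return variables[name]

    return re.sub(r"\{\{(\w+)\}\}", substitute, template)


if __name__ == "__main__":
    # Print the fully rendered prompt for every test case in the file.
    for case in load_test_cases(TEST_CASES_CSV):
        print(render_prompt(PROMPT_TEMPLATE, case))
        print("---")
```

Keeping each test case as a plain row keyed by variable name means the same file can be re-run unchanged against every new version of the prompt.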

Iterative Improvement and Comparison Tools

Iterating on prompts is also faster. Developers can create a new version of a prompt and quickly re-run the full test suite against it. A new comparison mode shows the outputs of two or more prompts side by side, and subject matter experts can grade each response on a 5-point scale. Together, these tools make it easy to tell whether a change actually improved a prompt, and by how much.
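As a rough illustration of how such graded comparisons can be summarized, the Python sketch below takes hypothetical 1-5 grades for two prompt versions on the same test cases. It lays them out side by side per case and ranks the versions by mean grade. The data layout, version names, and function names are assumptions chosen for this example. They are not the Console's export format.

```python
from statistics import mean

# Hypothetical expert grades (1-5) for each prompt version on the same test cases.
GRADES: dict[str, dict[str, int]] = {
    "v1": {"case_1": 3, "case_2": 4, "case_3": 2},
    "v2": {"case_1": 4, "case_2": 4, "case_3": 5},
}


def check_grades(grades_by_version: dict[str, dict[str, int]]) -> None:
    """Require identical test cases across versions and grades within 1-5."""
    case_sets = {frozenset(by_case) for by_case in grades_by_version.values()}
    if len(case_sets) != 1:
        raise ValueError("every version must be graded on the same test cases")
    for version, by_case in grades_by_version.items():
        for case_id, score in by_case.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{version}/{case_id}: grade {score} is outside 1-5")


def side_by_side(grades_by_version: dict[str, dict[str, int]]) -> list[tuple[str, dict[str, int]]]:
    """Return one (case_id, {version: grade}) row per test case, sorted by case id."""
    check_grades(grades_by_version)
    case_ids = sorted(next(iter(grades_by_version.values())))
    return [
        (case_id, {version: by_case[case_id] for version, by_case in grades_by_version.items()})
        for case_id in case_ids
    ]


def rank_versions(grades_by_version: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Return (version, mean grade) pairs, highest mean first."""
    check_grades(grades_by_version)
    means = [(version, mean(by_case.values())) for version, by_case in grades_by_version.items()]
    return sorted(means, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for case_id, row in side_by_side(GRADES):
        print(case_id, row)
    for version, avg in rank_versions(GRADES):
        print(f"{version}: mean grade {avg:.2f}")
```

The per-case view is kept alongside the ranking because a higher mean can hide a regression on an individual test case.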

Together, these enhancements give developers a faster, more accessible way to create, test, and refine prompts. Because they are built directly into the Anthropic Console, developers can go from a task description to a tested, graded prompt without leaving one tool.

By simplifying prompt generation and testing and providing solid tools for refinement, Anthropic is helping developers write higher-quality prompts and, in turn, build more effective and reliable AI applications.
