Many of you are probably looking for the best AI writing tool. Right now, ChatGPT is the most popular, with over 1 billion users. LLaMA comes second, Gemini is third with 275 million users, and DeepSeek is fourth with around 61.81 million users.

But do those numbers tell you which AI tool best fits your needs? Probably not.

Each AI tool mentioned above has its own strengths and limitations. ChatGPT is great for writing in a natural, chatty way, while LLaMA is highly flexible because it’s open-source.

It’s difficult for anyone to pinpoint which AI is truly the best, because “best” depends entirely on what you’re looking for.

Whether it’s creativity, technical accuracy, speed, integration, or open-source flexibility, each AI brings something unique to the table. Ready to get an in-depth comparison of these AI writing tools? Let’s get started!

Comparing the Strengths of LLaMA 4, DeepSeek, Gemini, and ChatGPT

Large language models are changing fast, with Meta’s Llama 4, DeepSeek, Google’s Gemini, and OpenAI’s ChatGPT all competing for the lead. Each model has its own features and strengths, which suit it to different uses.

Llama 4 (Meta)

Meta’s LLaMA 4 marks a major advancement in open-source AI, offering three distinct variants:

Scout:

- 17B active parameters

- 10M-token context window

- Optimised for long-context tasks

Maverick:

- 402B total parameters (17B active)

- Excels in multimodal reasoning

Behemoth (in training):

- 1.9T total parameters (288B active)

- Tailored for STEM applications

Architecture:

- Uses Mixture-of-Experts (MoE), activating specialised sub-networks per query

- Balances efficiency with high performance
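To make the idea of "activating specialised sub-networks per query" concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration only, not Meta's implementation: the toy experts, the random router weights, and the names `moe_forward` and `make_expert` are all assumptions made for this example.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, router_weights, top_k=2):
    """Route input vector x to the top_k experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's row).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Keep only the top_k highest-scoring experts; the others are never run.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are a softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

def make_expert(scale):
    """Hypothetical toy expert: scales its input by a fixed factor."""
    return lambda x: [scale * xi for xi in x]

random.seed(0)
NUM_EXPERTS, DIM = 8, 4
experts = [make_expert(s) for s in range(1, NUM_EXPERTS + 1)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

output, used = moe_forward([0.5, -1.0, 2.0, 0.1], experts, router_weights, top_k=2)
print(f"experts used: {used} ({len(used)} of {NUM_EXPERTS})")
```

Only the selected experts do any work for a given input, which is why a model such as Maverick can hold 402B parameters in total while using about 17B (roughly 4%) per token.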

Training Data:

- Trained on 30T tokens, including images and videos

- Enables strong multimodal capabilities

DeepSeek

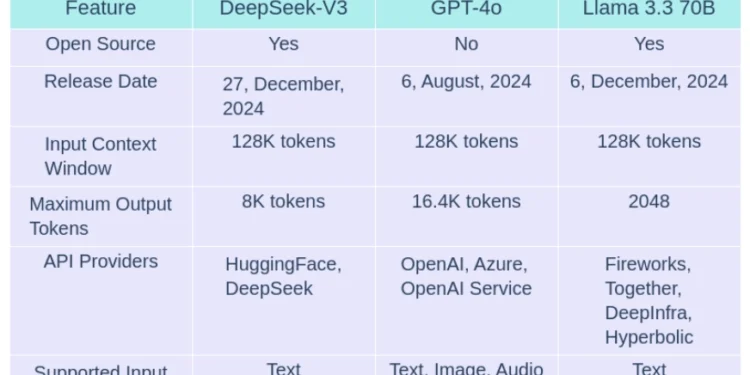

Developed by a Chinese startup, DeepSeek focuses on technical precision and coding proficiency with lower computational costs.

Key Versions:

DeepSeek-V3-0324:

- 32B parameters

- Outperforms larger models like LLaMA 4 Maverick in coding benchmarks

DeepSeek-R1:

- Optimised for step-by-step reasoning and mathematical tasks

Efficiency:

- Trained on technical datasets

- Achieves coding scores rivalling GPT-4.5 with far fewer parameters

Gemini (Google)

Google’s Gemini Family focuses on real-time processing and ecosystem integration.

Key Versions:

Gemini 2.0/2.5 Pro:

- Multimodal models processing text, images, and audio simultaneously

Gemini Flash-Lite:

- Cost-effective variant for scalable deployments

Strengths:

- Leverages Google’s search data and user interactions to improve contextual awareness

- Excels in multilingual applications and rapid information retrieval

ChatGPT (OpenAI)

OpenAI’s GPT-4.5 and GPT-4o are benchmarks for versatility and creative tasks.

Key Versions:

GPT-4.5:

- Outperforms LLaMA 4 in reasoning and knowledge-based benchmarks

GPT-4o:

- Optimised for conversational depth and tool integration

Strengths of ChatGPT:

- An estimated 1.8T parameters (OpenAI has not published an official figure)

- Trained on diverse data, enabling broad applicability for tasks ranging from content creation to technical analysis

Architectural Comparison

Efficiency and Scalability

- LLaMA 4: MoE architecture reduces inference costs by 70% compared to dense models. Scout supports a context window of up to 10M tokens and is priced at $0.19–$0.49 per million tokens, undercutting GPT-4o ($4.38 per million tokens).

- DeepSeek: Outperforms LLaMA 4 Maverick on coding benchmarks with 32B parameters against Maverick’s 402B, demonstrating superior parameter efficiency.

- Gemini: Optimised for Google’s TPU infrastructure, enabling real-time responses but requiring significant cloud resources for full multimodal capabilities.

- ChatGPT: High operational costs due to dense architecture, though custom GPTs allow task-specific optimisations.
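The per-million-token prices quoted above are easier to compare once they are applied to a concrete workload. The sketch below assumes a hypothetical job of 10 million tokens (one full Scout-sized context) and uses only the prices from this article; real bills differ because providers usually price input and output tokens separately.

```python
# Prices in USD per million tokens, as quoted in this article.
PRICE_PER_MILLION = {
    "Llama 4 Scout (low)": 0.19,
    "Llama 4 Scout (high)": 0.49,
    "GPT-4o": 4.38,
}

def cost_usd(tokens, price_per_million):
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million

TOKENS = 10_000_000  # hypothetical workload, chosen for illustration

costs = {name: cost_usd(TOKENS, price) for name, price in PRICE_PER_MILLION.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:.2f}")

# How many times more expensive GPT-4o is than each end of the Scout range.
print(f"ratio vs high end: {costs['GPT-4o'] / costs['Llama 4 Scout (high)']:.1f}x")
print(f"ratio vs low end: {costs['GPT-4o'] / costs['Llama 4 Scout (low)']:.1f}x")
```

At these list prices the gap is roughly 9 to 23 times, depending on which end of the Scout range applies.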

Multimodal Capabilities

- LLaMA 4 Maverick: Leads in image reasoning (MMMU score: 73.4) and document analysis (DocVQA: 94.4). Early fusion architecture integrates text, images, and video during pretraining.

- Gemini 2.5: Processes audio-visual data with low latency, ideal for real-time translation and video summarisation.

- ChatGPT: Relies on plugins for multimodal tasks, lagging in native image/video understanding.

- DeepSeek: Primarily text-focused, with limited multimodal support.

Performance Benchmarks

| Model | LiveCodeBench Score (%) | Parameters | Cost Efficiency (relative) |
| --- | --- | --- | --- |
| DeepSeek-V3-0324 | 76.2 | 32B | 1.0x |
| Llama 4 Maverick | 43.4 | 402B | 0.3x |
| GPT-4.5 | 68.9 | ~1.8T | 0.7x |
| Gemini 2.5 | 54.1 | ~340B | 0.5x |

DeepSeek really shines when it comes to coding. It solves 76.2% of the LiveCodeBench challenges and beats Llama 4 Maverick by 32.8 points, all while using roughly 12.6 times fewer parameters (32B versus 402B).
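The comparison is simple arithmetic on the figures quoted in this article, and a short script makes it easy to re-check if the numbers are updated. The `compare` helper is a name made up for this sketch.

```python
# LiveCodeBench scores and parameter counts (in billions) as quoted in this article.
models = {
    "DeepSeek-V3-0324": {"score": 76.2, "params_b": 32},
    "Llama 4 Maverick": {"score": 43.4, "params_b": 402},
}

def compare(a, b):
    """Return (score gap in points, how many times more parameters b has than a)."""
    gap = models[a]["score"] - models[b]["score"]
    ratio = models[b]["params_b"] / models[a]["params_b"]
    return gap, ratio

gap, ratio = compare("DeepSeek-V3-0324", "Llama 4 Maverick")
print(f"score gap: {gap:.1f} points, parameter ratio: {ratio:.2f}x")
```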

Users have found that Llama 4 has trouble with simple programming tasks, like coding a bouncing ball simulation, and often produces code that has syntax issues.

ChatGPT and Gemini aren’t as strong in specific coding tasks, but they do provide better support for debugging with their built-in tools.

Reasoning and Knowledge

| Model | MMLU Pro | MATH-500 | Training Data |
| --- | --- | --- | --- |
| Llama 4 Behemoth | 82.2 | 95.0 | 30T tokens |
| GPT-4.5 | 85.1 | 96.3 | 13T tokens |
| Gemini 2.5 Pro | 80.7 | 89.5 | 15T tokens |
| DeepSeek-R1 | 78.4 | 91.2 | 8T tokens |

GPT-4.5 is the go-to for general knowledge, scoring 85.1 on the MMLU Pro test, and it also tops the MATH-500 column with 96.3. Llama 4 Behemoth is close behind on the math side with a score of 95.0.

DeepSeek has a smaller training set, which means it might not cover as much, but it does have solid technical accuracy with a 91.2 on MATH-500.
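Reading rankings off a table by eye is error-prone, so here is a small sketch that sorts the models per benchmark. The scores are copied from the table above; the `ranking` function is a helper invented for this example.

```python
# Benchmark scores as listed in the reasoning and knowledge table.
results = {
    "Llama 4 Behemoth": {"MMLU Pro": 82.2, "MATH-500": 95.0},
    "GPT-4.5": {"MMLU Pro": 85.1, "MATH-500": 96.3},
    "Gemini 2.5 Pro": {"MMLU Pro": 80.7, "MATH-500": 89.5},
    "DeepSeek-R1": {"MMLU Pro": 78.4, "MATH-500": 91.2},
}

def ranking(benchmark):
    """Model names sorted from highest to lowest score on one benchmark."""
    return sorted(results, key=lambda m: results[m][benchmark], reverse=True)

for bench in ("MMLU Pro", "MATH-500"):
    print(bench, "->", ", ".join(ranking(bench)))
```

The two orderings differ only at the bottom: DeepSeek-R1 is last on MMLU Pro but ahead of Gemini 2.5 Pro on MATH-500, which matches its focus on step-by-step mathematical reasoning.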

Creative Writing

- ChatGPT: Generates nuanced narratives with adjustable tone, preferred for marketing content and storytelling.

- LLaMA 4 Maverick: Produces detailed, formal prose suitable for academic writing (rated 4.8/5 by technical users).

- Gemini: Balances creativity and conciseness, ideal for social media snippets.

- DeepSeek: Lacks stylistic flexibility; often perceived as “dry” by creative professionals.

Which AI LLM Truly Stands Out in 2025?

Each AI model has its strengths, making them fit for different jobs. **DeepSeek** is great for coding and technical tasks, offering strong performance at a low price, especially useful for debugging and algorithm design.

LLaMA 4 does well with multimodal tasks, like analysing textbooks with diagrams or medical images, and it’s good with long documents, such as legal papers. It’s also a strong choice for open-source projects where community input can make a difference.

Gemini really shines in real-time applications, like voice assistants or live translation, and it works well with multiple languages, thanks to Google’s big language databases.

Meanwhile, ChatGPT is fantastic for creative work—think content creation, storytelling, and answering all sorts of questions, though it can be more expensive.

So, the best AI model really depends on what you’re looking to do: DeepSeek for tech stuff, LLaMA 4 for research, Gemini for real-time needs, and ChatGPT for creative projects.